Code review during the pub process

At Arcadia, one of our central tenets is that our science should be maximally useful. We believe that useful computing is innovative, usable, reproducible, and timely.

Code review increases the reproducibility and usability of the computational products we put out by making sure:

- Our code is readable and well-documented.

- The software (and versions!) and resources we used are documented.

- It's clear how data inputs and outputs relate to the code.

Code review FAQ and discussion

Question: Is code review really necessary for things like figures, or things that just use existing methods and don't write new analysis code?

Thoughts: We think so! For command line tools, the parameters you specify or the versions of tools you use can make a pretty big impact on results, so writing these things down explicitly can be really helpful for interpreting the results or for comparing them to new results generated by the same tools. It's also super helpful for other people to see what you ran so they can use it as a recipe for a reasonable way to analyze their data: think of it like a tutorial in disguise. For figures, we think filtering steps or transformations are important to capture. Even if you don't do any of these steps, your figure code is also like a tutorial in disguise. Since we provide our input data and pubs openly, providing the code used to make a plot allows other people to interface with our results or to accomplish something similar to what we did. We get that coding a pub-ready figure is a big task, so it's totally fine to edit titles, labels, and fonts in Illustrator/Photoshop after making the plot (just be careful not to edit the data!).

Imagine a world in which you pick up a manuscript that has some really interesting new result. Wouldn't it be cool if you could access the code for the figures inline and recreate them all, but zooming in on the results you specifically care about? Or even cooler: what if you could follow the exact recipe documented in the manuscript for your data, and then quickly add your observations to the plots in the original manuscript? Executable manuscripts are a thing, but they're hard for reasons beyond code and data availability. Even so, providing the code we use, including for our figures, is both a key component of achieving these products and a suitable minimum replacement when the full thing isn't possible.

Question: Could code review happen after version 1 of the pub?

Thoughts: The goal of code review in the pub release process is to make sure someone inside or outside of Arcadia could pick up the code you used and, with minimal effort, reproduce the results you got and reuse the code you wrote. We think that code review prior to the release of the first version of a pub will increase the usefulness of the computational products we're releasing to the world.

Our goal is to have the code review component of the pub release process become a formality; we want code review to happen quickly after a unit of code is written (a new function, a new analysis notebook, a new figure) so that the feedback you get is actually useful and doesn't slow you down. If we can get to that point, the checks in the pub release cycle will be super quick. To make this process faster and to reduce the heat around version 1 pub releases, we've created a checklist of the five things that are needed to approve code associated with the first release of a pub. Any other suggestions you get are just that – suggestions – and can be punted to later pub releases or ignored.

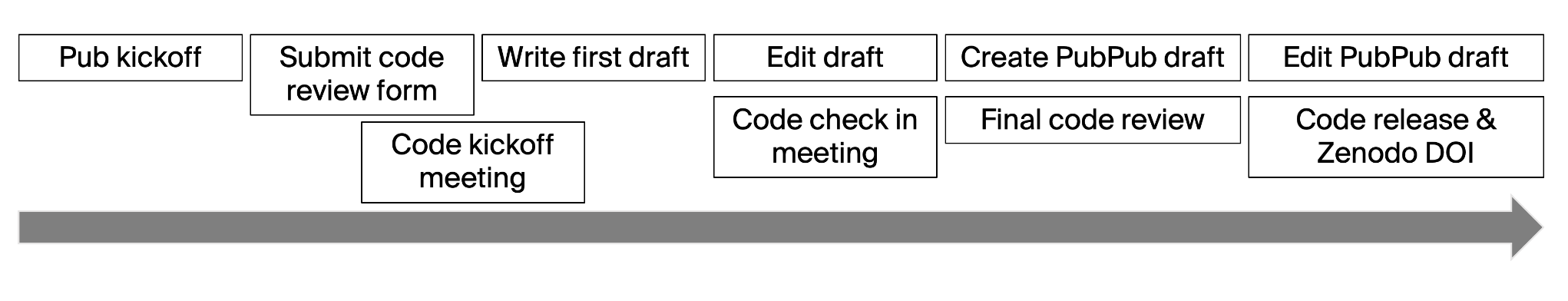

Overview of the pub process and how it relates to code review

Check out the "What to expect from code review during the pub process" Notion page for an up-to-date guide through this process.

Minimums for passing code review: a checklist

- All software packages and their versions are documented.

- Data inputs and outputs are documented.

- Only relative paths (not absolute paths) are used, and each relative path either references a file in the repo or comes with documentation for how to get the file.

- Enough comments and/or documentation are provided so it's clear what the code does.

- DOI for pub is linked in the README.
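The relative-path item on this checklist is one that can be roughly spot-checked from the command line. The sketch below is only an illustration, not part of Arcadia's review tooling: the `find_absolute_paths` helper and the demo file names and paths are hypothetical. It lists files that contain a quoted string starting with `/` or `~/`.

```shell
set -eu

# Hypothetical demo scripts; the file names and paths are made up for illustration.
demo="$(mktemp -d)"
printf '%s\n' 'counts <- read.csv("/Users/yourname/projects/pub/data/counts.csv")' > "$demo/bad.R"
printf '%s\n' 'counts <- read.csv("data/counts.csv")' > "$demo/good.R"

# List files containing a quoted string that starts with "/" or "~/".
# This is a rough heuristic: it can miss paths built from variables and
# can flag strings that are not file paths.
find_absolute_paths() {
    grep -rlF -e '"/' -e "'/" -e '"~/' -e "'~/" "$1" || true
}

# Prints the path of each flagged file (here, only bad.R).
find_absolute_paths "$demo"
```

In a real repo you would point the helper at the repo root (for example `find_absolute_paths .`) and read the flagged files by hand, since a match is only a hint that a path may need fixing.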

Most common pain points with code review

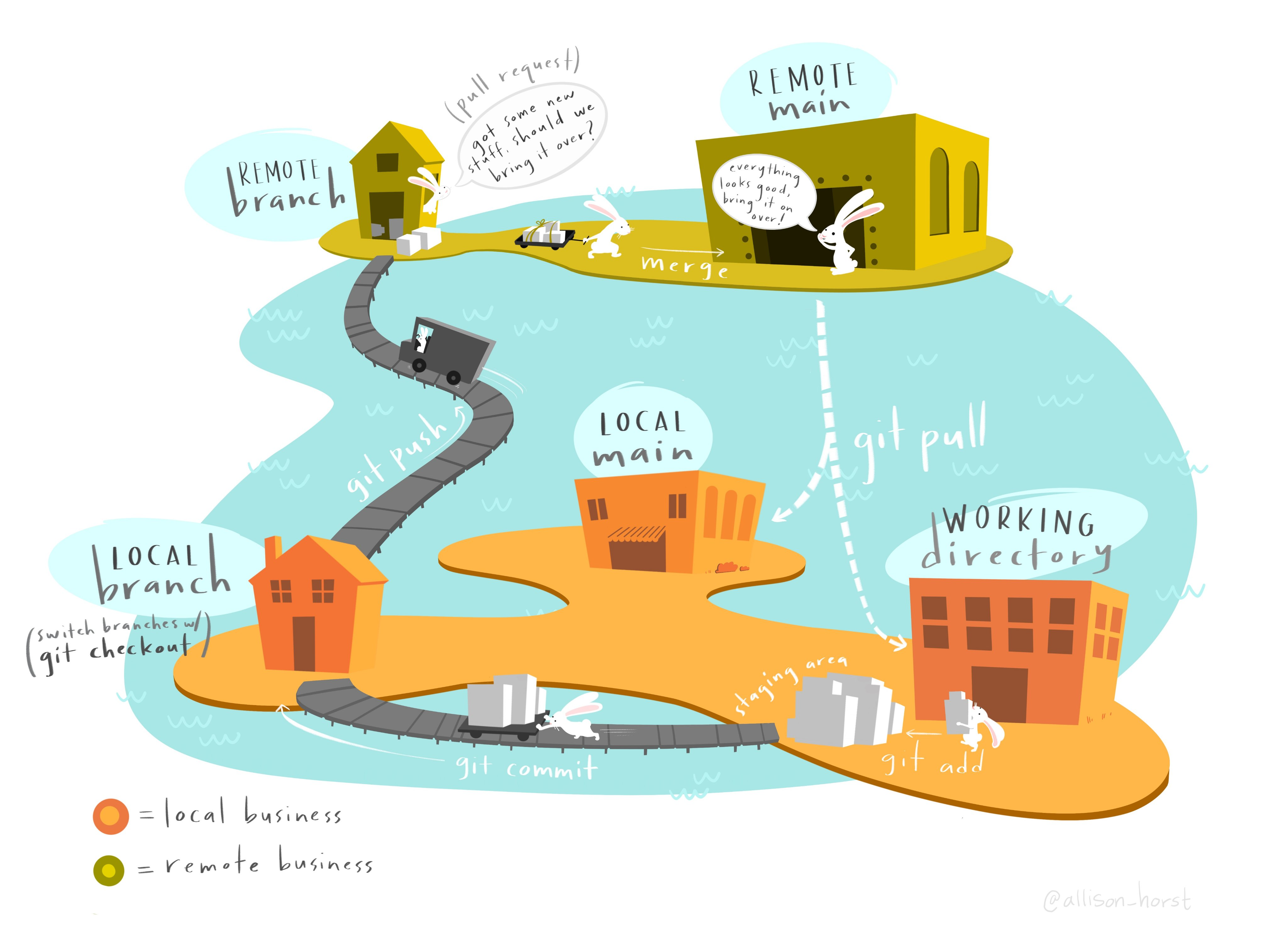

- Pushing to `main` or `master` instead of to a branch and opening a pull request

- Requesting review of the entire repo instead of requesting periodic reviews

GitHub refresher

- Make a new folder wherever your GitHub repos live. Name it `tmp-yourinitials-aug`.

- Download this file as our pretend code that we've been working on. Save it in your new folder.

- Create an empty, private repository in the Arcadia-Science organization. Use the same name as the local folder we made.

- Copy and paste the instructions given to you on the screen.

- Create and checkout a new branch with `git checkout -b ter/init-pr`. Name it `yourinitials/init-pr`.

- Use `git add`, `git commit`, and `git push` to add our file to a branch in your new repository.

- Open a pull request and explain your changes.

- Convert your PR to a draft.

- Convert it back to "ready for review" and request review from someone.
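The command-line part of the steps above can be sketched as a shell session. This is a minimal sketch under stated assumptions, not the exact commands GitHub shows on screen: a local bare repository stands in for the empty Arcadia-Science repo so the example runs offline, and `analysis.py`, the commit messages, and the name and email values are placeholders.

```shell
set -eu

# Assumption: a scratch directory replaces "wherever your GitHub repos live",
# and a local bare repo replaces the empty repository created on GitHub.
workdir="$(mktemp -d)"
remote="$workdir/remote.git"
repo="$workdir/tmp-yourinitials-aug"
git init --quiet --bare "$remote"

# Make the new folder and save the pretend code in it (placeholder file).
mkdir "$repo"
cd "$repo"
echo 'print("pretend analysis")' > analysis.py

# Roughly what the on-screen instructions do: initialize the repo,
# make a first commit on main, connect the remote, and push.
git init --quiet
git config user.name "Your Name"        # only needed if not set globally
git config user.email "you@example.com" # placeholder address
echo "# tmp-yourinitials-aug" > README.md
git add README.md
git commit --quiet -m "first commit"
git branch -M main
git remote add origin "$remote"         # on GitHub this would be the repo URL
git push --quiet -u origin main

# Create and switch to a new branch named with your initials.
git checkout --quiet -b yourinitials/init-pr

# Add the pretend code on the branch and push the branch.
git add analysis.py
git commit --quiet -m "Add pretend analysis script"
git push --quiet -u origin yourinitials/init-pr
```

Opening the pull request, converting it to a draft, and requesting review then happen in the GitHub web interface.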

Resources to help you thrive through code review

- Your code review partner, AUG office hours, and the #software-questions channel

- Arcadia software handbook

- GitHub workshop and recording

Future directions

We really want the code review process to be as painless as possible. When we identify things that people do over and over again – building a binder from an R repo, writing a Nextflow pipeline – we can build templates that you can clone at the start of a project and use to cut down on repetitive work.

If you have any other ideas for how we can help, we're always happy to try new things!